I have a Sense Energy Monitor but would really like a local solution, ideally Z-Wave, since much of my house already uses Z-Wave. Aeotec makes a whole-house energy monitor, but I need more clamps to monitor things like my HVAC system, which can't be tracked with smart plugs.

Sense's appliance detection hasn't worked well with variable loads, and it seems to have trouble distinguishing between the various heat pumps in the house.

IoTaWatt was out of stock for a while. It's Wi-Fi, but it can accommodate more clamps.

HVAC Journey: Mitsubishi Equivalent Pipe Length

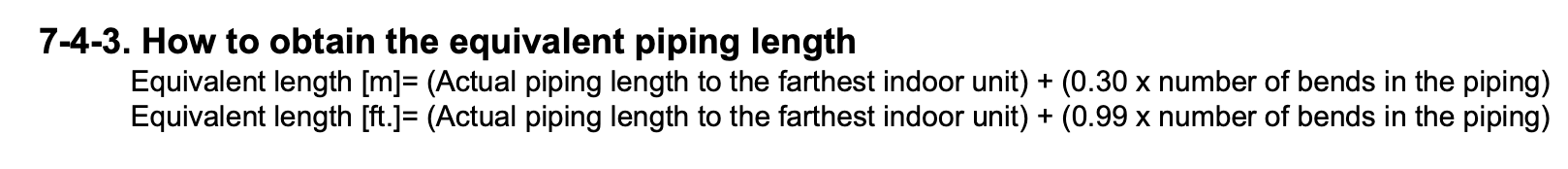

While trying to debug problems with my HVAC system, I came across "equivalent pipe length". Mitsubishi seems to omit it from installation manuals but includes it in their engineering manuals.

Equivalent pipe length accounts for the pressure drop of fittings, valves, and bends in a line by counting each of them as extra length, which lets a system's limits be stated as a single number. If a system has a maximum line length of 100', a measured run of 100' only fits if there are no additional parts in the line dropping the pressure. If you add fittings, valves, or bends, the measured length has to be below 100' to stay within the system limit, which is specified as equivalent pipe length.
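Stated as a formula (my own notation, not Mitsubishi's), the measured length plus an allowance for each of the $n$ fittings, valves, or bends in the run has to stay within the published limit:

$$
L_{\text{eq}} = L_{\text{measured}} + \sum_{i=1}^{n} \ell_i \le L_{\max}
$$

Here $\ell_i$ is the equivalent-length allowance for fitting $i$, and $L_{\max}$ is the limit published as equivalent pipe length, such as the 100' above.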

An example

As a practical example, here is my system (as best I could model it in Diamond System Builder):

I have a single outdoor unit with 4 indoor units: a central air handler and 3 mini-splits. The lengths in this diagram are measured lengths. System Builder calculates the equivalent pipe length automatically and checks it against the listed equipment specifications, which is pretty cool.

However, I can't model my system correctly in Diamond System Builder, because the measured length to the Front Bedroom is 84' with 6 bends.

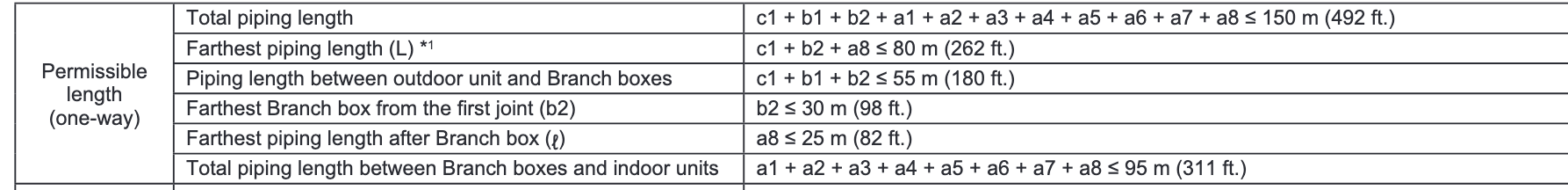

For my outdoor unit, the installation manual gives a "Farthest piping length after Branch box" of 82'. That 82' is the equivalent pipe length, NOT the measured pipe length.

I've confirmed with a Mitsubishi representative that the installation manual uses the equivalent pipe length, not the measured pipe length. This aligns with System Builder, where I can enter 82' if I use 0 bends. The maximum length it accepts with the 6 real-world bends in my system is 76'.

In the engineering manual there is a calculation for the equivalent pipe length on this system:

That is why I can only enter 76' with 6 bends (76' + 6 * 0.99' = 81.94', just under the 82' limit).
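The same check can be sketched in a few lines of Python. This assumes bends are the only fittings in the run and uses the 0.99' per-bend allowance from the 76' + 6 * 0.99' calculation; valves or other fittings would need their own allowances from the engineering manual. The function names are my own.

```python
import math

# Equivalent length added per bend, taken from the 76' + 6 * 0.99' example.
# Assumption: bends are the only fittings in the run.
BEND_EQUIVALENT_FT = 0.99


def equivalent_length(measured_ft: float, bends: int,
                      per_bend_ft: float = BEND_EQUIVALENT_FT) -> float:
    """Measured length plus the equivalent length contributed by each bend."""
    return measured_ft + bends * per_bend_ft


def max_measured_length(limit_ft: float, bends: int,
                        per_bend_ft: float = BEND_EQUIVALENT_FT) -> int:
    """Longest whole-foot measured run that stays within an equivalent-length limit."""
    return math.floor(limit_ft - bends * per_bend_ft)


def within_spec(measured_ft: float, bends: int, limit_ft: float) -> bool:
    """True if the run's equivalent length does not exceed the limit."""
    return equivalent_length(measured_ft, bends) <= limit_ft


if __name__ == "__main__":
    limit = 82  # "Farthest piping length after Branch box" from the install manual
    print(f"76' with 6 bends -> {equivalent_length(76, 6):.2f}' equivalent")
    print(f"max measured length with 6 bends: {max_measured_length(limit, 6)}'")
    print(f"Front Bedroom (84', 6 bends) within spec: {within_spec(84, 6, limit)}")
```

Running it gives 81.94' equivalent for 76' with 6 bends, a 76' maximum measured length with 6 bends (matching what System Builder accepts), and False for the 84' Front Bedroom run.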

How would someone know

I'm not sure how someone would know that the installation manual uses equivalent pipe length. Maybe this is covered in training for Mitsubishi equipment. My Mitsubishi Diamond Elite contractors don't seem to know about it, though. Or at least it didn't stop them from installing this equipment in what appears to me to be outside the manufacturer's spec.

The scale of hyperscaler datacenters, and of this cutback, is difficult to comprehend. I guess these cuts suggest there still isn't a killer app for AI with the general public yet, or even one planned.

On and off, I've spent time trying to find a replacement for Postgres for the software I write. Postgres is wonderful, but it's also a heavy requirement for lots of little homelab services. I tend to run a Postgres instance for each service, which takes quite a bit of memory and maintenance overhead.

In Rust-land there is DataFusion, which has a SQL interface for querying and inserting data. Inserting data can be a bit messy, though, and doesn't seem to be the focus at all.

DataFusion is well integrated with Delta, though, and Delta looks really interesting! It can use in-memory, filesystem, or remote storage. It supports writes fairly well, including (somewhat manually) the maintenance tasks folks complain about with Postgres (compaction/vacuuming).

It took a day, but I have a working prototype that creates a table on Minio, inserts data, and queries the table. The examples on Delta's site seem both out of date and overly verbose.

I ran into a problem with my homelab Minio cluster where a single node was able to corrupt the listing (ls) of a bucket. With that node turned off, ls showed the latest files; with the node running, ls wouldn't list any files added in the last month. Writes worked in either case; the new files just weren't listable. A manual heal on the cluster seemed to fix it well enough.

HVAC Journey: Wall Mounted Mitsubishi Compressor

At the end of 2024, my family started a project to remove natural gas (methane) service from our house. As part of that, we began replacing our gas furnace with a heat pump.

The contractor mounted the outdoor unit on the wall of our house in a side alley that kept it out of the way.

Wall mounting saves space on the ground, and mounting a couple of feet up gives the condensate room to drain when the unit is heating the house. It does not help when the unit is a source of vibration: the wall mount carried that vibration into the structure of my house.

Mitsubishi has a helpful application note on the compressor's operating frequencies at various points in a cycle. During the startup of a cycle, while the compressor was in the 20-50 Hz range, my house would vibrate, and it could be felt in rooms throughout the house.

Pads (the blue/black pieces) were placed in the mounting to help address the issue.

They... might have helped. It's hard to say; I don't really have the tools to measure structural vibration. They did not help enough to stop it driving me crazy every time the compressor started.

What did solve the problem was moving the mounting to the ground.

This addressed the structural vibration issues to the point that I could no longer feel anything in my house.

Trying out the ‘deepseek-r1:7b’ model has been interesting so far. I've liked using it to explore different ways of approaching a problem before I make a final decision myself.

Thankful for a Reddit post pointing out that SmartHQ appliances need a 2.4 GHz network. The SmartHQ app isn't all that helpful with its error messages.

I've had trouble with WireGuard connections from my phone for a while. For maybe 2 years it wasn't a big enough issue to worry about. When I finally looked into it, it seems to be an MTU issue that causes problems with some sites, like DuckDuckGo. With that set as my default search engine, it felt like the Internet was broken.

Setting the MTU to 1420 in my router's WireGuard config seems to have fixed it! It was previously set to 1200... for a reason I cannot recall.
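For reference, 1420 is the usual WireGuard default, and the arithmetic behind it is short: take the underlying link MTU and subtract the outer IP header, the UDP header, WireGuard's 16-byte data header, and its 16-byte authentication tag. The sketch below assumes a 1500-byte Ethernet link and outer headers without options or extensions; links with a smaller MTU, such as PPPoE at 1492, need a correspondingly smaller value.

```python
# Per-packet overhead WireGuard adds on top of the inner (tunneled) packet, in bytes.
WG_HEADER = 16     # message type + reserved (4), receiver index (4), counter (8)
WG_AUTH_TAG = 16   # Poly1305 authentication tag on the encrypted payload
UDP_HEADER = 8
IP_HEADER = {"ipv4": 20, "ipv6": 40}  # outer header, no options/extension headers


def wireguard_mtu(link_mtu: int = 1500, outer: str = "ipv6") -> int:
    """Largest inner MTU whose encrypted packets still fit in link_mtu."""
    overhead = IP_HEADER[outer] + UDP_HEADER + WG_HEADER + WG_AUTH_TAG
    return link_mtu - overhead


if __name__ == "__main__":
    for outer in ("ipv4", "ipv6"):
        print(outer, wireguard_mtu(1500, outer))
```

With an IPv6 outer header this gives 1420, and with IPv4 it gives 1440, so 1420 is the value that fits either way on a 1500-byte link.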

The video is worth watching as well!

I've added a sanity check to my common GitHub Actions for building Docker containers: after building the image, run the tool with -h. A couple of times I've been bitten by shared library versions differing between the build and runtime base images. This at least verifies that the binary is in place and runs!

    - name: Build
      uses: docker/build-push-action@v6
      with:
        platforms: ${{ inputs.docker_platforms }}
        context: ${{ inputs.context }}
        cache-from: type=gha
        cache-to: type=gha,mode=max
        load: true
        tags: local-build:${{ github.sha }}
        push: false
    - name: Check Container
      if: inputs.check_command != ''
      run: |
        docker run local-build:${{ github.sha }} ${{ inputs.check_command }}

I'm running into this issue with my local Docker Registry, where things seem corrupted after a garbage collection. I thought it was how I was deleting multi-arch images, but maybe not! For now I'm just disabling garbage collection in my setup.

I'd love to try out OrioleDB for its decoupled storage for Postgres. Keeping all data on S3/Minio would simplify disk management for my databases and remove any reliance on a remote filesystem for Postgres.

One current limitation prevents me from fully running it, though: "While OrioleDB tables and materialized views are stored incrementally in the S3 bucket, the history is kept forever. There is currently no mechanism to safely remove the old data." I'll have to keep an eye on development and the next release.

I hadn't heard of sigstore before. I need to keep this list around for the next time I see someone recommending PGP.

With 0.0.9 of declare_schema, I've started failing when a migration can't be run. At the moment, these failures tend to reflect room for improvement in my implementation rather than limitations in Postgres.

Current limitations:

- Cannot modify table constraints. As of Postgres 17, only foreign key constraints can be altered anyway.

- Cannot modify indexes. I'd like to improve this, but it's super messy, and index creation can take a while. I'm not sure how I'd like to handle it, so for now the approach is to create a new index and later drop the old one.
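A create-then-drop index swap in Postgres could look roughly like the following. This is a hypothetical helper for illustration, not declare_schema's actual implementation, and the table and index names are made up. It uses CREATE INDEX CONCURRENTLY so writes to the table aren't blocked while the new index builds. Both CREATE INDEX CONCURRENTLY and DROP INDEX CONCURRENTLY refuse to run inside a transaction block, so the statements have to run one at a time outside a migration transaction, and a failed concurrent build leaves an invalid index behind that must be dropped before retrying.

```python
def index_swap_statements(table: str, index: str, columns: list) -> list:
    """Hypothetical helper: SQL to replace `index` on `table` with one over `columns`.

    Identifiers are assumed to be validated/quoted by the caller already.
    """
    temp = f"{index}_new"
    cols = ", ".join(columns)
    return [
        # Build the replacement without blocking writes to the table.
        f"CREATE INDEX CONCURRENTLY {temp} ON {table} ({cols})",
        # Drop the old index without taking a lock that blocks queries.
        f"DROP INDEX CONCURRENTLY {index}",
        # Give the new index the old name so later schema diffs still match.
        f"ALTER INDEX {temp} RENAME TO {index}",
    ]


if __name__ == "__main__":
    for stmt in index_swap_statements("users", "users_email_idx", ["tenant_id", "email"]):
        print(stmt + ";")
```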

I'm getting fairly confident using it. Going forward, I hope to work more on docs and examples.

I've been meaning to try out this IdType trait pattern. My SQLx usage so far benefits somewhat from having separate structs for data being written and data being read, so I haven't quite gotten around to testing it.

via

Some of my projects recently failed during lockfile updates with:

error "OPENSSL_API_COMPAT expresses an impossible API compatibility level"

The lockfile updates included openssl-sys-0.9.104. I didn't think I needed OpenSSL at all, since I lean on rustls for the most part. This was the push I needed to figure out why OpenSSL was still being included.

reqwest enables default-tls as a default feature, which appears to be native-tls (i.e., OpenSSL on Linux). Disabling default features worked for my projects:

reqwest = { version = "0.12.4", features = ["rustls-tls", "json"], default-features = false }

While upgrading to Postgres 17 I ran into a few problems in my setup:

- I didn't update pg_dump as well, so backups stopped for a few days.
- pg_dump for Postgres 17 (in some conditions? at least in my setup) requires ALPN with TLS.

From the release notes:

Allow TLS connections without requiring a network round-trip negotiation (Greg Stark, Heikki Linnakangas, Peter Eisentraut, Michael Paquier, Daniel Gustafsson)

This is enabled with the client-side option sslnegotiation=direct, requires ALPN, and only works on PostgreSQL 17 and later servers.

I run Traefik to proxy Postgres connections, taking advantage of TLS SNI so that a single Postgres port can be opened in Traefik, which then routes each connection to the appropriate Postgres instance. Understandably, Traefik doesn't advertise support for the postgresql protocol over TLS by default; this must be configured explicitly.

In Traefik, I was seeing logs such as: tls: client requested unsupported application protocols ([postgresql])

From pg_dump, the error was: SSL error: tlsv1 alert no application protocol "postgres"
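To check whether the proxy advertises postgresql without going through pg_dump, a direct TLS handshake that offers only the "postgresql" ALPN protocol should reproduce the same failure. This is a sketch using Python's standard ssl module; the script name is a placeholder, and it assumes the proxy terminates TLS on port 5432 and routes by SNI. It imitates sslnegotiation=direct by starting TLS immediately instead of first sending Postgres's SSLRequest message.

```python
import socket
import ssl
from typing import Optional


def make_context(verify: bool = True) -> ssl.SSLContext:
    """TLS client context that offers only the postgresql ALPN protocol."""
    ctx = ssl.create_default_context()
    if not verify:
        # Only for quick debugging against self-signed certificates.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    ctx.set_alpn_protocols(["postgresql"])
    return ctx


def probe_alpn(host: str, port: int = 5432, verify: bool = True,
               timeout: float = 5.0) -> Optional[str]:
    """Direct TLS handshake; returns the ALPN protocol the server selected.

    Raises ssl.SSLError if the server rejects the offered protocol.
    """
    ctx = make_context(verify)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # server_hostname sets SNI, which is what the proxy routes on.
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()


if __name__ == "__main__":
    import sys
    if len(sys.argv) > 1:
        try:
            print("selected ALPN:", probe_alpn(sys.argv[1]))
        except (ssl.SSLError, OSError) as exc:
            print("handshake failed:", exc)
    else:
        print("usage: probe_alpn.py HOST")
```

Before the proxy is configured, I'd expect the handshake to fail with a "no application protocol" alert, much like pg_dump's error; afterwards it should print postgresql.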

Fixing this required configuring Traefik to explicitly say postgresql was supported.

    # Dynamic configuration
    [tls.options]
      [tls.options.default]
        alpnProtocols = ["http/1.1", "h2", "postgresql"]

As documented, this is dynamic configuration: it must go in a dynamic configuration file, not the static one. In my static config I needed to add:

    [providers]
      [providers.file]
        directory = "/local/dynamic"
        watch = true

Here /local/dynamic is a directory that contains the dynamic configuration. I was unable to get alpnProtocols set through Nomad dynamic configuration; I always ran into "invalid node options: string" when Traefik tried to load the config from Consul. Maybe from this
