Time to upgrade Nix on my MacBook. Ran into

    ---- oh no! --------------------------------------------------------------------
    It seems the build user _nixbld15 already exists, but with the UID
    '31008'. This script can't really handle that right
    now, so I'm going to give up.

during the install. I just ran through the build users, deleting each one so the installer could figure it out afterwards:

    sudo /usr/bin/dscl . -delete /Users/_nixbld15

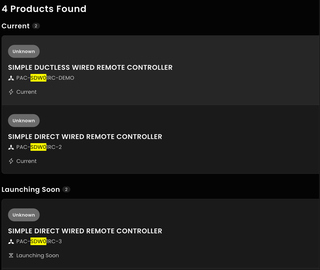

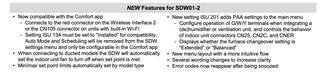

Looks like the replacement for the PAC-SDW01RC-1 thermostat is finally coming out! The PAC-SDW01RC-2! But also there is a 03 launching soon!

I've had problems with the -1 where a power cycle would cause the thermostat to read from the incorrect temperature sensor, switching from the thermostat to the sensor inside the air handler. This led to being 5-10 degrees off from the room temperature! I'm waiting on my HVAC contractor to get a unit and try replacing it.

Besides the fix, I'm glad to see Wi-Fi integration as well! I don't use the Kumo Cloud unit but instead use a Z-Wave connector with a minisplit. I'd like to get the air handler integrated.

No idea what the 03 revision does though!

With Github recently having issues it was a nice time to see what other options folks recommend. I've run forgejo for a little bit but it always feels like a bit too much for solo projects.

Two new ones mentioned that I haven't seen before were tangled and Radicle. tangled seems like a nice integrated model, but self-hosting the knot is only one piece of the system. Radicle seems like a further departure from usual forge systems.

I added a repo to each of Radicle and tangled. The tangled story for CI seems nice with a Nix-based runner. The Radicle system is less developed.

I'm giving Restic a go for backups with a NixOS system. The integration on NixOS is pretty easy to set up and backing up to Minio was painless. I still need to work on the sequencing for services that won't work with just a disk copy (SQLite mostly, I think).

I've mostly seen people ask about Borg in the self-hosting community, but I wanted something S3-compatible natively and Borg doesn't seem to fit that.

There is a fork of the Minio Console UI that is bringing back the recently removed features! I haven't given it a try, but it might be the next bit I set up in my homelab to give it a spin. The lack of SSO in Minio's "new" UI has been annoying.

It looks like Minio is enshittifying:

Minio strips away almost all features from AGPL interface and suggests people use their licensed "AIStor" service instead

I use Minio a bit for storage to avoid reliance on a single node and to scale storage beyond what a single server can handle. I never really loved Minio, but it seems that Garage replicates and SeaweedFS requires a more complex setup to replicate the filer.

I joined Obsidian Catalyst just to try out the Bases plugin. So far it's worth it, might fill the void for a self-hosted, plaintext Notion Database replacement!

Something went wrong on a homelab server and Docker stopped cleaning up old overlays.

Running docker system prune -a -f (via) seemed to fix it.

Total reclaimed space: 149GB :tada:

I'm excited to see other folks interested in a Limbo/SQLx integration.

SQLx + support for an S3 store falls close to my ideal use case for application DB usage.

It's nice to see more OIDC services coming out that (presumably) work well for self-hosting: Pocket-ID (via This Week in Self-Hosted) and Rauthy.

I'm still using kanidm with no need to switch, but it's nice to see maybe-easier things to recommend for other folks to try!

I have a Sense Energy Monitor but would really like a local solution, ideally Z-Wave as a bunch of my house is already Z-wave-d. Aeotec makes a whole house energy monitor but I need more clamps to monitor things like my HVAC system which can't be tracked with smart plugs.

The Sense learning for appliances hasn't worked all that well with variable loads and it seems to have trouble discerning between the various heat pumps in the house.

IoTaWatt was previously out of stock for a while. It's wifi, but can accommodate more clamps.

I've spent time, on and off, trying to find a replacement for Postgres for software I write. Postgres is wonderful but is also a heavy requirement for lots of little homelab services. I tend to run a Postgres instance for each service, which takes quite a bit of memory and maintenance overhead.

In Rust-land there is DataFusion, which has a SQL interface for querying and inserting data. Inserting data can be a bit messy though and seems very much not to be the focus.

DataFusion is integrated well with Delta though! Delta appears really interesting! It can use memory, filesystem, or remote storage. It supports writes fairly well, including (fairly manually) the maintenance items that folks complain about with Postgres (compaction/vacuuming).

It took a day but I have a working prototype of creating a table on Minio, inserting data, and querying the table. Examples on Delta's site seem out of date and overly verbose at the same time.

I ran into a problem with my homelab Minio cluster where a single node was able to corrupt the ls of a bucket. With that node turned off ls showed the latest files, with the node running ls wouldn't list any files added in the last month. Writes were fine in either case, other than not being ls-able. A manual heal on the cluster seemed to fix it fine enough.

Thankful for a Reddit post pointing out that SmartHQ appliances need a 2.4 GHz network. The SmartHQ app isn't all that helpful with its error messages.

I've had trouble with connections from my phone over WireGuard for a while. For maybe 2 years it wasn't a big enough issue to worry about. Finally looking, it seems to be an MTU issue that winds up causing problems for some sites, like DuckDuckGo. When I had that set as my default search engine it felt like the Internet was broken.

Setting the MTU to 1420 in my router WireGuard config seems to have fixed it! It was previously at 1200... for a reason I cannot recall.
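A rough sketch of where 1420 comes from, assuming a 1500-byte link MTU and WireGuard's usual per-packet overhead: the outer IP header, the UDP header, and 32 bytes for WireGuard's data header plus auth tag. The names `OuterIp`, `wireguard_overhead`, and `tunnel_mtu` are made up for illustration.

```rust
/// Outer IP version carrying the encrypted WireGuard UDP packets.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum OuterIp {
    V4,
    V6,
}

/// Bytes of per-packet overhead added around the inner (tunnelled) packet.
pub fn wireguard_overhead(outer: OuterIp) -> u16 {
    let ip_header = match outer {
        OuterIp::V4 => 20, // IPv4 header without options
        OuterIp::V6 => 40, // fixed IPv6 header
    };
    let udp_header = 8;
    // WireGuard transport data message: 16-byte header + 16-byte auth tag.
    let wireguard = 32;
    ip_header + udp_header + wireguard
}

/// Largest tunnel MTU that fits in `link_mtu` without fragmenting the outer packet.
/// Returns `None` if the link MTU is smaller than the overhead itself.
pub fn tunnel_mtu(link_mtu: u16, outer: OuterIp) -> Option<u16> {
    link_mtu.checked_sub(wireguard_overhead(outer))
}
```

With a 1500-byte link this gives 1420 when the outer packets travel over IPv6 and 1440 over IPv4, so 1420 is the value that is safe for both.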

I've added some sanity checks to my common Github Actions when I build Docker containers: run the -h of a tool after building the image. I've been bitten a couple of times by shared lib version mismatches between build and runtime base images. This at least verifies that the binary is in place and works!

    - name: Build
      uses: docker/build-push-action@v6
      with:
        platforms: ${{ inputs.docker_platforms }}
        context: ${{ inputs.context }}
        cache-from: type=gha
        cache-to: type=gha,mode=max
        load: true
        tags: local-build:${{ github.sha }}
        push: false
    - name: Check Container
      if: inputs.check_command != ''
      run: |
        docker run local-build:${{ github.sha }} ${{ inputs.check_command }}

I'm running into this issue with my local Docker Registry where things seem corrupted after a garbage collect. I thought it was how I was deleting multi-arch images, but maybe not! I'm just disabling garbage collection for now in my system.

I'd love to try out OrioleDB for the decoupled storage for running Postgres. Keeping all data on S3/Minio would ease my DB management for disks and remove any reliance on remote filesystems for Postgres.

The current limitation prevents me from running it fully though: "While OrioleDB tables and materialized views are stored incrementally in the S3 bucket, the history is kept forever. There is currently no mechanism to safely remove the old data." I'll have to keep an eye on the dev / next release.

With 0.0.9 of declare_schema I'm starting to fail if migrations cannot be run. At the moment the failures tend to reflect room for improvement in my implementation rather than limitations in Postgres.

Current limitations:

- Cannot modify table constraints. As of Postgres 17, only foreign key constraints can be modified anyway.

- Cannot modify indexes. I'd like to improve this but it's also super messy. Index creation can take a while. I'm not sure how I'd like to handle this, so the method for now is to create a new index and later drop the old one.

I'm starting to get fairly confident in my usage of it. Going forward I hope to work more on docs and examples.

Some of my projects recently failed during lockfile updates with:

error "OPENSSL_API_COMPAT expresses an impossible API compatibility level"

The lockfile updates included openssl-sys-0.9.104. I remember not really needing OpenSSL at all, leaning on rustls for the most part. This was the push I needed to figure out why OpenSSL was still being included.

reqwest includes default-tls as a default feature, which seems to be native-tls, a.k.a. OpenSSL (on Linux at least). Removing default features worked for my projects:

    reqwest = { version = "0.12.4", features = ["rustls-tls", "json"], default-features = false }

While upgrading to Postgres 17 I ran into a few problems in my setup:

- I didn't update `pg_dump` as well, so backups stopped for a few days

- `pg_dump` for Postgres 17 (in some conditions? at least my setup) requires ALPN with TLS.

From the release notes:

Allow TLS connections without requiring a network round-trip negotiation (Greg Stark, Heikki Linnakangas, Peter Eisentraut, Michael Paquier, Daniel Gustafsson)

This is enabled with the client-side option sslnegotiation=direct, requires ALPN, and only works on PostgreSQL 17 and later servers.

I run Traefik to proxy Postgres connections, taking advantage of TLS SNI so a single Postgres port can be opened in Traefik and it will route the connection to the appropriate Postgres instance. Traefik... understandably... doesn't default to advertising that it supports the postgresql protocol over TLS. This must be done explicitly.

In Traefik I was seeing logs such as `tls: client requested unsupported application protocols ([postgresql])`

From pg_dump the log was SSL error: tlsv1 alert no application protocol "postgres"
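Both log lines point at ALPN negotiation finding no protocol in common. Here is a simplified model of that selection, assuming the server walks its own list in preference order. It is not Traefik's or Go's actual code, and `negotiate_alpn` is a hypothetical name.

```rust
/// Pick the application protocol for a TLS connection, server preference first.
/// Returns `None` when the two lists share nothing, which is the situation
/// where a TLS server answers with a "no application protocol" alert.
pub fn negotiate_alpn<'a>(server: &[&'a str], client: &[&str]) -> Option<&'a str> {
    server.iter().copied().find(|s| client.contains(s))
}
```

A server advertising only `http/1.1` and `h2` has nothing to offer a client that sends only `postgresql`, so the handshake is rejected before any Postgres traffic flows.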

Fixing this required configuring Traefik to explicitly say postgresql was supported.

    # Dynamic configuration
    [tls.options]
      [tls.options.default]
        alpnProtocols = ["http/1.1", "h2", "postgresql"]

This, as documented, is dynamic configuration. It must go in a dynamic config file, not the static one. In my static config I needed to add:

    [providers]
      [providers.file]
        directory = "/local/dynamic"
        watch = true

Where /local/dynamic is a dir that contains dynamic configuration. I was unable to get the alpnProtocols set with Nomad dynamic configuration. I always ran into invalid node options: string when Traefik tried to load the config from Consul. Maybe from this

Pleased again with SQLx tests while adding tests against Postgres to test migrations. Previously there weren't any automated tests for "what does this lib pull from Postgres"; I was doing that manually.

    use sqlx::PgPool;

    #[sqlx::test]
    async fn test_drop_foreign_key_constraint(pool: PgPool) {
        // Set up the starting schema, including the foreign key.
        crate::migrate_from_string(
            r#"
            CREATE TABLE items (id uuid NOT NULL, PRIMARY KEY(id));
            CREATE TABLE test (id uuid, CONSTRAINT fk_id FOREIGN KEY(id) REFERENCES items(id))"#,
            &pool,
        )
        .await
        .expect("Setup");

        // Generate migrations toward the same schema without the constraint.
        let m = crate::generate_migrations_from_string(
            r#"
            CREATE TABLE items (id uuid NOT NULL, PRIMARY KEY(id));
            CREATE TABLE test (id uuid)"#,
            &pool,
        )
        .await
        .expect("Migrate");

        let alter = vec![r#"ALTER TABLE test DROP CONSTRAINT fk_id CASCADE"#];
        assert_eq!(m, alter);
    }

Really impressed with SQLx testing. Super simple to create tests that use the DB in a way that works well in dev and CI environments. I'm not using the migration feature but instead have my own setup to get the DB into the right state at the beginning of tests.

Making a PR to SQLx to add Postgres lquery arrays. This took less time than I expected to try and fix. More time was spent wrangling my various projects to use a local sqlx dependency.

Postgres ltree has a wonderful ? operator that will check an array of lquerys. I plan to use this to allow filtering multiple labels in my expense tracker.

ltree ? lquery[] → boolean

lquery[] ? ltree → boolean

Does ltree match any lquery in array?
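To get a feel for the "any lquery in the array" semantics, here is a toy matcher. It assumes a heavily simplified lquery that only knows literal labels and a bare `*` (zero or more labels); real lquery has more syntax (quantifiers, `|`, modifiers) that this ignores. The function names are hypothetical and this is not how Postgres implements it.

```rust
/// Match label slices against a simplified lquery pattern.
fn match_labels(labels: &[&str], pattern: &[&str]) -> bool {
    let Some((head, rest)) = pattern.split_first() else {
        // Pattern exhausted: only a match if the path is exhausted too.
        return labels.is_empty();
    };
    if *head == "*" {
        // `*` may absorb zero or more labels; try every split point.
        return (0..=labels.len()).any(|n| match_labels(&labels[n..], rest));
    }
    match labels.split_first() {
        Some((label, tail)) => label == head && match_labels(tail, rest),
        None => false,
    }
}

/// Match a dotted path such as "expenses.food" against one pattern.
pub fn matches_lquery(path: &str, query: &str) -> bool {
    let labels: Vec<&str> = path.split('.').collect();
    let pattern: Vec<&str> = query.split('.').collect();
    match_labels(&labels, &pattern)
}

/// Rough analogue of `ltree ? lquery[]`: true if any pattern matches.
pub fn matches_any(path: &str, queries: &[&str]) -> bool {
    queries.iter().any(|q| matches_lquery(path, q))
}
```

For label filtering, a row tagged `expenses.food.groceries` would pass a filter of `income.*` or `expenses.food.*`, while `expenses.travel` would not.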

I'm trying to figure out if I can create Service Accounts in Kanidm and get a JWT that will work with pREST. pREST can be configured to use a .well-known URL to pull a JWK. This would allow me to give a long-lived service account API key to each service and keep token generation out of my services.

It looks like not yet! But they seem to be aware of this use case.

Teable looks interesting but doesn't seem to call out what is limited without the enterprise version.

2 projects listed in This Week in Self-Hosted (27 September 2024): AirTrail and Hoarder. I don't use either, but good to see more self-host stuff adding OIDC!

Giving Mathesar a try for a "life CMS". I loved Notion databases for easily creating structured data and linking between items and have been trying various things to replace it. Maybe this will be it! I like that it's specifically Postgres (as that's most of my homelab). It's missing a good mobile interface and OIDC though.

I spent 90 minutes dehulling soybeans by hand. Wish I'd tried the food processor.

In a couple of cases I've seen my feed reader "catch up" on lots of posts at once, where I thought I'd missed items or things were backdated and found again. This has mostly happened with folks who seem to use Ghost (Molly White's micro posts and Support Human).

The data flow I'm using is [Site RSS] -> [Miniflux] -> [Reeder]

Getting w2z set up to support lists of strings in the CMS. First time using _hyperscript to add/remove items in a list. Working really well so far!

New version of Reeder now out. I've been using Reeder (now Reeder Classic) since ~2012 (v2.5.4 from what I can tell in old email receipts).

This new version is a redesign that doesn't seem relevant for me at least. The single timeline view isn't how I want to view feeds but I can see how that is what many people might be used to coming from social media.

It looks like Github branch rulesets allow setting a bypass for specific app integrations! This should allow my Github app to avoid making a branch, PR, and auto-merging... which would be nice eventually!

First time giving rulesets a try

I'm exploring using Github Apps for w2z instead of fine-grained personal access tokens (PATs). Replacing PATs every 90 days is a bit tedious. Eventually the app flow should give a better experience.

you should know that if the algorithm chooses you it has nothing to do with the quality or value of your work. And I mean literally nothing. The algorithm is nothing more than a capitalist predator, seeking to consume what it can, monetize it quickly, then toss aside. If you make the algorithm your audience, you get very good at creating for an audience of machines rather than humans. Creating for humans is harder, it may get you ignored by the algorithm, but your work will be better for it, and it will find an audience in time.

- Brian Koberlein via P&B

Saw Daybreak via Simon Clark and would love to give it a go at some point! The collaborative nature of the game and the reality of climate action seem really appealing.

Based on a Github issue I was able to get a collection with Zola taxonomies writing the proper TOML-frontmatter format.

    collections:
      - ...
        fields:
          - label: "Taxonomies"
            name: "taxonomies"
            widget: "object"
            fields:
              - { label: "Tags", name: "tags", widget: "list", allow_add: true }

This is written out into the frontmatter as

    +++
    [taxonomies]
    tags = ["tag"]
    +++

Anyone using DecapCMS? I gave it a try a while ago, and it looks like there are now Nested Collections, which should match how I have my site set up.

I guess I'll look forward to PostgreSQL 17, between better upserts and some label improvements.

The MERGE seems to take more code than I'd like. I wish ON CONFLICT didn't bloat and had an option for ALWAYS RETURNING that would return the row even if not modified. I'd deal with the bloat if it were simple code and always returned the row.

Cool-looking Rust crate registry, Cratery. OAuth login, S3 storage. Guess I could set up Litestream for DB storage as well.

Last spring we found it slightly too cold to go camping when we otherwise had the chance. Maybe time to pick up a warmer sleeping bag.

I was confused when I didn't see a Vegan label on a Silk carton. I'm not really less confused after reading the FAQ.

I was able to get tracecontext passthrough to OTel working! I was missing the opentelemetry_sdk::trace::TracerProvider which pulls in the information to help build the OTel data that gets sent to collectors.
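For reference, this is roughly what gets passed through: a W3C `traceparent` header carrying a version, a trace ID, a parent span ID, and flags. Below is a minimal std-only parser sketch for version `00` only. `TraceParent` and `parse_traceparent` are made-up names, not part of the opentelemetry crates, which have their own propagators.

```rust
/// The pieces of a version-00 `traceparent` header.
#[derive(Debug, PartialEq)]
pub struct TraceParent {
    pub trace_id: String,
    pub parent_id: String,
    pub sampled: bool,
}

/// True if `s` is exactly `len` lowercase hex characters.
fn is_lower_hex(s: &str, len: usize) -> bool {
    s.len() == len && s.bytes().all(|b| matches!(b, b'0'..=b'9' | b'a'..=b'f'))
}

/// Parse `00-<32 hex trace id>-<16 hex parent id>-<2 hex flags>`.
pub fn parse_traceparent(header: &str) -> Option<TraceParent> {
    let mut parts = header.trim().split('-');
    let (version, trace_id, parent_id, flags) =
        (parts.next()?, parts.next()?, parts.next()?, parts.next()?);
    // Only version 00 is handled in this sketch; it has exactly four fields.
    if version != "00" || parts.next().is_some() {
        return None;
    }
    if !is_lower_hex(trace_id, 32) || !is_lower_hex(parent_id, 16) || !is_lower_hex(flags, 2) {
        return None;
    }
    // All-zero IDs are defined as invalid.
    if trace_id.bytes().all(|b| b == b'0') || parent_id.bytes().all(|b| b == b'0') {
        return None;
    }
    let flags = u8::from_str_radix(flags, 16).ok()?;
    Some(TraceParent {
        trace_id: trace_id.to_string(),
        parent_id: parent_id.to_string(),
        sampled: flags & 0x01 == 0x01, // lowest bit is the "sampled" flag
    })
}
```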

A new sqlparser release pushed out my changes for supporting declare-schema! I released 0.0.1 but haven't gone through and updated my services yet.

In a month or so of using the dev version of declare-schema in my services I've had a good time! Since I wrote it with my mental model for services in mind, it works as I want it to! The library itself would probably wind up with significant data loss for others.

Interesting to see Rust Otel discussing the friction for (tokio)-tracing. Tracing with DataDog was really convenient. I always wanted to get our shop moved to Otel to eventually be able to move away from DataDog though, partially due to pricing, partially due to more adoption of Google Cloud and not wanting to ship data out just for tracing.

I lean towards wanting tokio-tracing to be a/the supported case. I haven't found a solution with Otel that I really like. The initial setup has always seemed tricky, and with a cross-cutting concern like tracing, adopting Otel seems heavyweight. A single ecosystem (Otel) across language stacks is super appealing. At this point I'm mostly writing Rust things though so that isn't a huge selling point for me.

I do finally have a tracing setup in a lib that is working well, but haven't gotten distributed tracing going yet. I'm fine adopting Otel for wire formats but I'd like to keep tracing in my app. I've yet to find a good way to thread W3C tracecontext into Otel output.

Works for me to try cleaning out a Docker registry: Docker Registry Cleaner. Keeps the last N images of each repo as determined by a label on the image.

On each deploy to my homelab I set a label on the image to be used so this cleans up images I'm no longer using.

Super alpha-y state... will likely result in data loss!
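The keep-the-last-N idea can be sketched like this. It assumes each image carries a deploy timestamp that decides ordering; how the real tool reads that from the image label is not shown here, and `Image` and `images_to_delete` are hypothetical names.

```rust
use std::collections::BTreeMap;

/// One image in the registry, with an assumed deploy timestamp (Unix seconds).
#[derive(Debug, Clone, PartialEq)]
pub struct Image {
    pub repo: String,
    pub tag: String,
    pub deployed_at: u64,
}

/// Return the images that fall outside the newest `keep` per repo.
pub fn images_to_delete(images: &[Image], keep: usize) -> Vec<Image> {
    // Group by repo; BTreeMap keeps the output order deterministic.
    let mut by_repo: BTreeMap<&str, Vec<&Image>> = BTreeMap::new();
    for image in images {
        by_repo.entry(image.repo.as_str()).or_default().push(image);
    }
    let mut doomed = Vec::new();
    for group in by_repo.values_mut() {
        // Newest first, then everything past the first `keep` is a candidate.
        group.sort_by(|a, b| b.deployed_at.cmp(&a.deployed_at));
        doomed.extend(group.iter().skip(keep).map(|i| (**i).clone()));
    }
    doomed
}
```

Returning the list instead of deleting directly leaves room for a dry run, which seems wise for anything that can lose data.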

I think I've always had a pleasant time upgrading my NixOS boxes. For my homelab servers

    sudo nix-channel --add https://channels.nixos.org/nixos-24.05 nixos
    sudo nixos-rebuild switch --upgrade
    sudo reboot

Has "just worked". Keeping most of my lab stuff in Nomad helps with this as well as there isn't much that will change at the OS level, mostly Nomad, Consul, and Vault.

Charles Schwab seems to fall into a frustrating trap. An ACH transfer can be "complete" but there is still more to do (clear).

You Keep Using That Word, I Do Not Think It Means What You Think It Means

Worked on a PR for sqlparser to struct-ify some things. It's part of making a declarative way to generate SQL migrations at runtime from SQL CREATE TABLE statements. I had a version in Erlang before and enjoyed it enough that I want it in Rust.

sqlmo does part of the migration generation piece and I'm working to add some pieces there as well.

I missed a release of sqlparser though so the embed-able version of my lib will need to wait for merge/release before I can push my version to crates.io. The bin version of it will be something like the atlas OSS tool, but without any cloud component.
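The declarative idea boils down to diffing the schema you have against the schema you declared and emitting statements for the difference. Here is a toy version for columns only, assuming both sides are already parsed into name-to-type maps. It is not how my lib or sqlmo actually does it, and `Columns` and `diff_columns` are illustrative names.

```rust
use std::collections::BTreeMap;

/// Column name -> SQL type, e.g. "id" -> "uuid".
pub type Columns = BTreeMap<String, String>;

/// Generate ALTER TABLE statements that move `current` toward `desired`.
/// Type changes on existing columns are deliberately ignored in this sketch.
pub fn diff_columns(table: &str, current: &Columns, desired: &Columns) -> Vec<String> {
    let mut statements = Vec::new();
    // Columns declared but not present yet get added.
    for (name, sql_type) in desired {
        if !current.contains_key(name) {
            statements.push(format!("ALTER TABLE {table} ADD COLUMN {name} {sql_type}"));
        }
    }
    // Columns present but no longer declared get dropped (this loses data).
    for name in current.keys() {
        if !desired.contains_key(name) {
            statements.push(format!("ALTER TABLE {table} DROP COLUMN {name}"));
        }
    }
    statements
}
```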

At some point I want to try Wired Elements for styling personal projects, to help convey the rough state in which I usually leave them...

Definitely caught off guard with readTimeout in Traefik. Upping that fixed frequently-dying Postgres connections though!

DNSimple sent me an email with the subject:

asdf

and the body:

asdf

I assume someone there clicked something too soon.

Seeing more and more Github repos with renovate.json files as folks switch from Dependabot to Renovate. The app makes setup super easy. I'm pleased with it so far for the ~10 repos I've moved over.

I never really followed many folks on Nostr but I did at least update my Nostr -> RSS bridge to linkify URLs. A step towards embedding appropriate image/video tags in the RSS feed when things look like images/videos.
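Linkifying is simple enough to sketch with only the standard library. This version assumes URLs are whitespace-delimited tokens starting with `http://` or `https://` and does not try to trim trailing punctuation. It is not the bridge's actual code, and `linkify` and `escape_html` are names picked for the example.

```rust
/// Escape the characters that matter inside HTML text and attributes.
fn escape_html(s: &str) -> String {
    s.replace('&', "&amp;")
        .replace('<', "&lt;")
        .replace('>', "&gt;")
        .replace('"', "&quot;")
}

/// Append the pending token to `out`, wrapping it in a link if it looks like a URL.
fn flush(token: &mut String, out: &mut String) {
    if token.is_empty() {
        return;
    }
    let escaped = escape_html(token);
    if token.starts_with("http://") || token.starts_with("https://") {
        out.push_str(&format!("<a href=\"{0}\">{0}</a>", escaped));
    } else {
        out.push_str(&escaped);
    }
    token.clear();
}

/// Turn bare URLs in plain text into anchor tags, preserving whitespace.
pub fn linkify(text: &str) -> String {
    let mut out = String::new();
    let mut token = String::new();
    for ch in text.chars() {
        if ch.is_whitespace() {
            flush(&mut token, &mut out);
            out.push(ch);
        } else {
            token.push(ch);
        }
    }
    flush(&mut token, &mut out);
    out
}
```

The same token check is where an image or video extension test could hook in later.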

There is an open PR to improve Docker registry garbage collection. It helps clean up multi-arch images, which I tend to pull a bunch.

Giving it a try with a personal build, it wound up reducing my registry size by about 50%!

In today's "Solving Problems I Create For Myself" news:

I updated my `docker-prefetch-image` daemon to attempt pulling from alternative Docker repositories in the event an image pull fails.

This fixes the case where updating my Docker Repository infrastructure prevents pulling/running new Docker images. Now the prefetching will attempt to pull from my local repository and fall back to Docker Hub, then tag the image as if it came from my repository.
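The fallback logic is roughly the following, sketched with the actual pull abstracted into a closure so it can run without Docker. The registry host used in the usage note is a made-up example and `prefetch` is a hypothetical name, not the daemon's real code.

```rust
/// Try each registry in order. On the first successful pull, return the
/// reference that was pulled and the name to tag it as, which always uses
/// the first (primary) registry so later runs find it under the local name.
pub fn prefetch<F>(image: &str, registries: &[&str], mut pull: F) -> Option<(String, String)>
where
    F: FnMut(&str) -> bool,
{
    let primary = registries.first()?;
    let target = format!("{primary}/{image}");
    for registry in registries {
        let source = format!("{registry}/{image}");
        if pull(&source) {
            return Some((source, target));
        }
    }
    None
}
```

With registries `["registry.example.internal:5000", "docker.io"]` and the local registry down, pulling `library/nginx:1.27` would succeed from `docker.io` and then be tagged as `registry.example.internal:5000/library/nginx:1.27`.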

The Miniflux option on feeds ‘Disable HTTP/2 to avoid fingerprinting’ seems to fix any feeds that seem to be blocked (maybe by Cloudflare?).

Having an EV charger at a vacation house is such a quality-of-life improvement. We didn’t seek out a house with a charger, but now I think we always will.

So I don't forget...

As seen on Reddit ...:

Don't forget to set the viewport when starting a new site

<meta name="viewport" content="width=device-width, initial-scale=1.0">

By far the best vegan meatballs we've made have been the It Doesn't Taste Like Chicken Vegan Italian Meatballs. Previous recipes we've tried have been black bean based. They tend not to hold together well enough. These ones don't fall apart!

I recently tried running OpenLLM in my homelab. It was super nice to set up and run, but performance was pretty poor running models on CPU. A GGUF model on HF was running pretty quickly but OpenLLM doesn't seem to support GGUF.

Running text-generation-webui with a Mistral GGUF model has been really nice. The performance on an AMD Ryzen 3 5300U is super usable!